[Q&A #02] Bombs vs. Bugs

One of the interesting things for me about this shared space of ours is to be able to see what it is that you most enjoy—or don’t—and where your questions lie. The most common request I’ve heard is about, uh, well:

Closely following that desire for what I’m going to call the occasional shorter piece in a more conversational tone, are requests for practical technical advice:

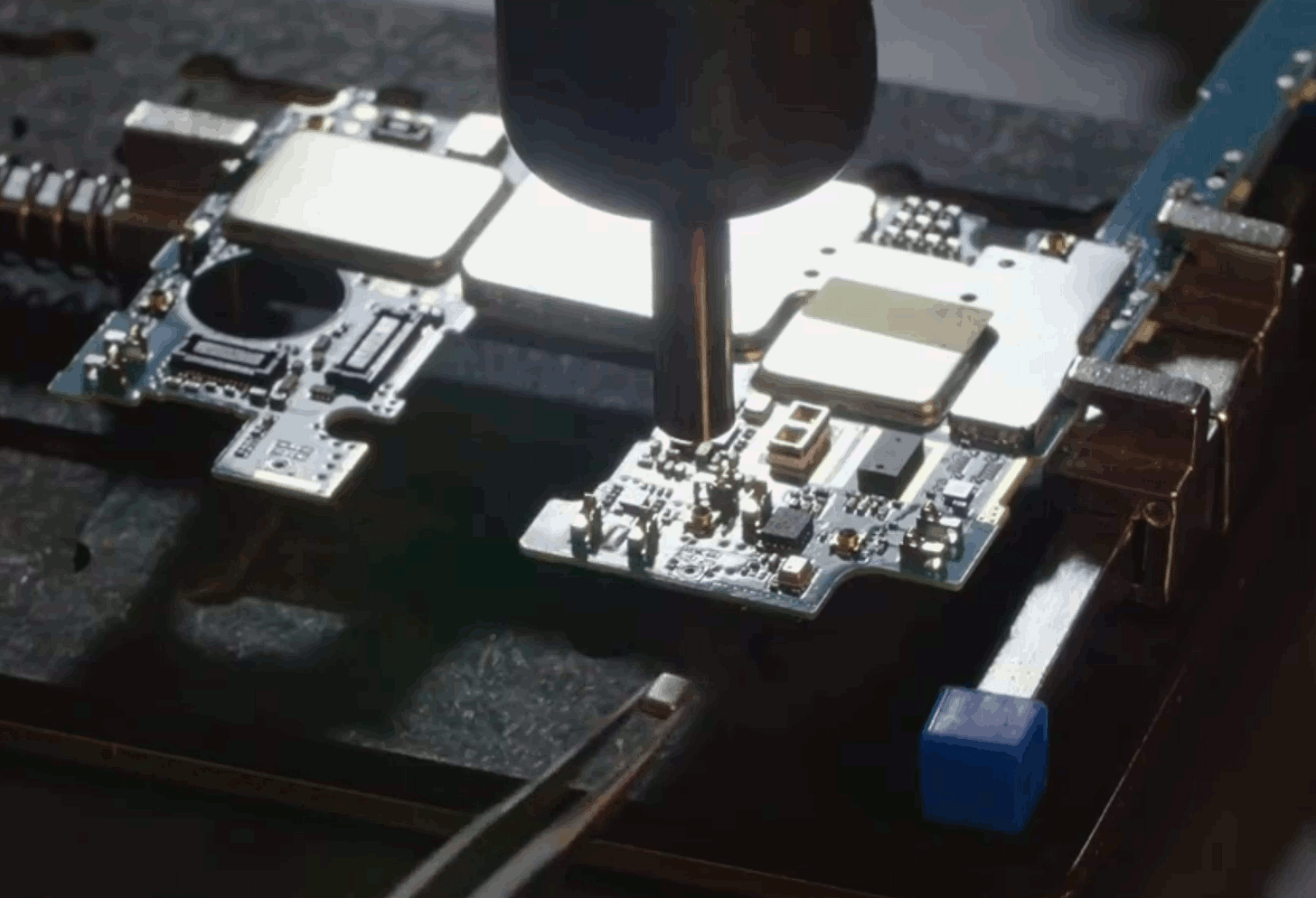

Patrick Emek writes:

“Is there any chance you might write a future article explaining in easy to understand language how one might go about removing the listening devices from mobile phones?”

stefanibus writes:

"I am a normal citizen with no intention to screw open my phone prior to using it. What shall I do after reading this article? I might need a detailed behavioral pattern. Some kind of a plan I can stick to."

Shane writes:

Would removing any of the chips remove functionality of the phone other than the spying pieces?

For the last eight years, I’ve tried hard to stay away from “what, specifically, should I do?” questions, because technology moves so quickly—and readers’ circumstances are so varied—that the answers tend to age off and become dangerous after just a few months, but Google will keep recommending them to people for years and years. However, I’ve recently been persuaded by the increasingly dire state of the network that the risks of bad advice are outweighed by how hard it is to develop the public understanding of even very basic technical principles. That means I’ll be taking some time in the next few months to talk about technology and my personal relationship to it, particularly how I deal with the newly hostile-by-default nature of it.

If you like, I might also be able to bring in some real experts to chat about some of the more complex problems in more depth. Let me know in the comments if audio content like that is of interest.

Addict writes:

"While I agree that they need to be stopped, I'm worried about what consequences it might have to ban code. I personally view code as a form of free speech, but I am not sure what implications it might have to ban specific forms of code."

I struggle with this question quite a bit myself. It’s apparent to me that just as we cannot trust governments to decide the things that can and cannot be said, we should not empower them to start permitting the applications that we can write (or install). However, we also have to recognize that until your neighbors are lining up to storm the Bastille, we’re forced to respond to policy crises within the context of the system that currently exists.

The government already manages to (unconstitutionally) regulate speech in the United States through various means: we need look no further for examples than the Espionage Act, or the abuse of state secrecy to prevent any accountability for those involved in the CIA’s recent torture program. I’m fairly confident that you could watch any arbitrary White House Press Conference today to witness indicia of that continuing impulse applied to new controversies. And as you say, outside the United States, circumstances are often similar or worse.

How, then, do we restrain the governmental impulse to reward cronies and punish inconvenient voices in the context of something as complex as exploit code? If we’re going to ensure publicly harmful activity (the insecurity industry) is impacted while exempting the publicly beneficial—defenders, researchers, and uncountable categories of benign tinkerers—we need to identify some variable that is always present in category A, but not strictly required by category B.

And we have that, here. That thing is the profit motive.

Nobody goes to work at the NSO Group to save the world. They do it to get rich. They can’t do that without the ability to sell the exploits they develop far and wide. You ban the commercial trade in exploit code, and they’re out of business.

In contrast, a researcher at the University of Michigan writing a paper on iOS exploitation or some kid on a farm trying to repair their family’s indefensibly DRM-locked John Deere tractor is not going to be deterred from doing that just because they can’t sell the result to the most recent cover model for Repressive Dictator magazine.

That’s the crucial caveat that I think many missed regarding my call for a global moratorium: it is a prohibition on the commercial trade—that is to say, specifically the for-profit exploitation of society at large, which is the raison d’etre of the insecurity industry—rather than the mere development, production, or use of exploit code. My proposal here is in fact closer to an inversion of the government’s response to Defense Distributed, where it sought to reflexively ban the mere transmission of information without regard to motivation, use, or consequence.

Eoan writes:

"Which is more likely to kill someone if allowed to be sold: a software exploit or a 250-kg air-dropped bomb?"

I think this misses the point. Beyond the question of “how do they know where to drop the bomb?”, there’s a more important one: “which of these is more likely to be used?”

Bombs are blunt instruments, the ultima ratio regum of a state that has exhausted its ability—or appetite—to engage in less visible forms of coercion. They are at least intended to be the very end of the kill-chain, the warhead-on-forehead that erases the inconvenient problem.

Software exploits, in contrast, are at the very top of that chain. They are the means by which a state decides who and where to bomb—if it even gets that far, since spying provides an unusual menu of options for escalation. As I mentioned, a software vulnerability can be exploited repeatedly, widely, and nearly invisibly. Unlike a bomb, if an exploit generates a headline, it will most probably happen years later instead of on that evening’s news. The result is that spying is far more common than bombing, and in many ways becoming prerequisite to it.

Another little-understood property that makes exploits more dangerous than bombs, and they definitely are, is that, as with the viral strain of a biological weapon, as soon as an adversary catches a sample of an exploit, they can perfectly reproduce it... and then use it themselves against anyone they want.

When you fire a rocket or drop a bomb, you don’t have to worry about the target catching it and throwing it back at you. But every time you use an exploit, you run the risk of losing it.

All of this is to say that while we can’t stop the sale of bombs, when the Saudis then drop that bomb on someone, we know that it happened, we often know who made it (from the markings on exploded fragments at the scene), we normally know who dropped it, and we know who it was dropped on. In the vast majority of cases involving the insecurity industry’s hacking-for-hire, not one of these details is known to us, and all of them are important in providing for the possibility of enforcing accountability for misuse.
